Bokeh-licious: Shallow Depth of Field for Images using Portrait Segmentation and Depth Estimation

Dec 17, 2022 • 12 min • Computer Vision • Source

Have you ever admired those magazine-cover quality photos with the subject in crisp focus and a beautifully blurred background? The seemingly effortless way the subject pops from the image draws the eye and creates visual interest. Photographers achieve this by using shallow depth of field - minimizing the portion of the image that appears in focus. But mastering a shallow depth of field can be deceptively tricky, especially for smartphone cameras with tiny lenses. That's why in this project, I set out to develop an algorithmic approach to artificially generate this prized photographic effect.

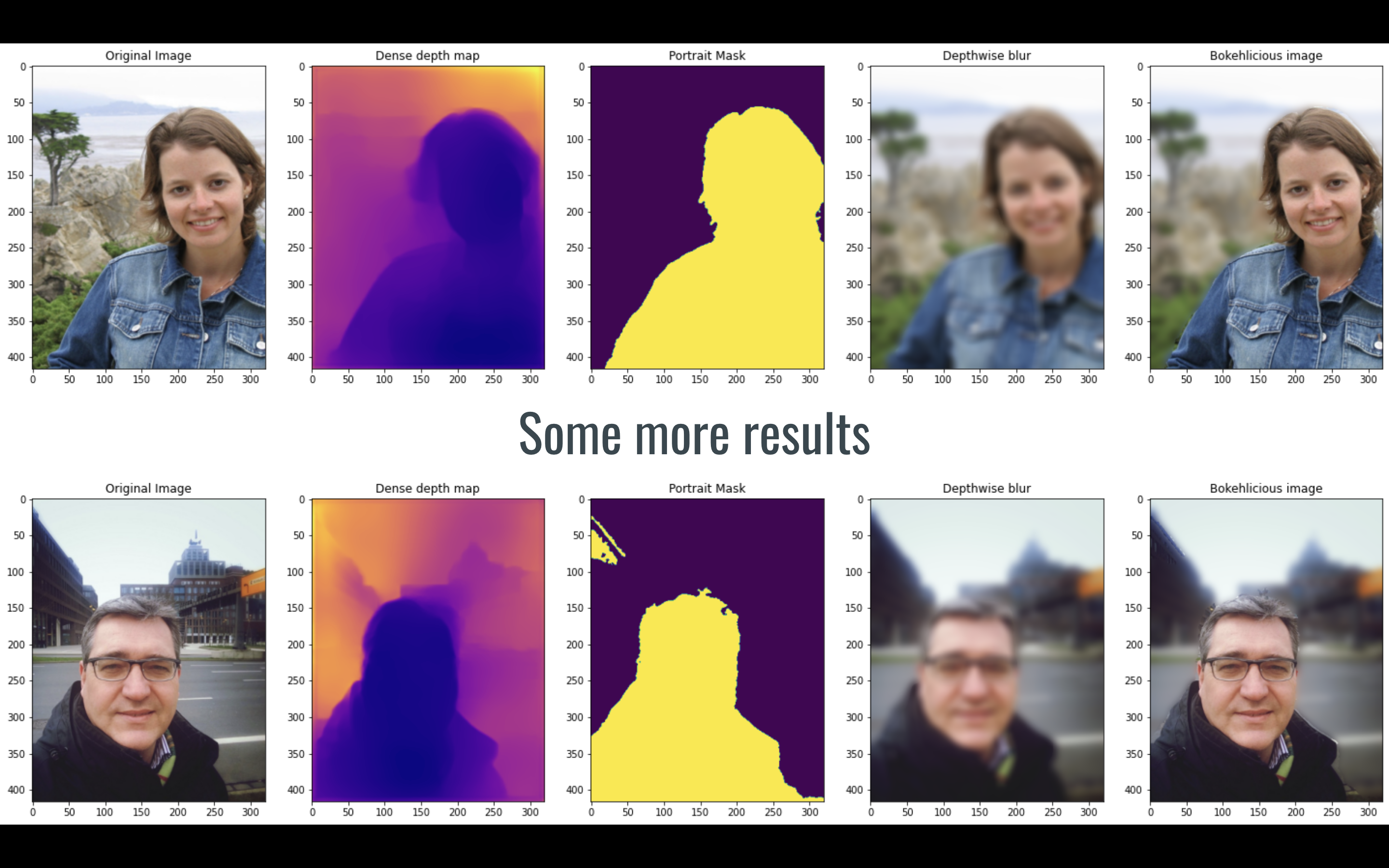

By combining the powers of portrait segmentation and depth estimation - both enabled by deep learning - my method can take a standard photo and blur the background in a realistic, non-destructive manner. First, a deep neural network scans the image and detects the key subject, whether it's a person, pet or product. Next, a depth estimation model predicts the relative distance of objects in the scene. Finally, using the results from these models, my algorithm selectively applies blur to the background while keeping the subject crisp and clear. Think of it like a depth-aware digital paintbrush that softly blurs distant areas and leaves the subject untouched.

While this novel approach sounds complex, I'll break it down step-by-step in this blog post so anyone can understand the concepts and code. I'll also showcase some before-and-after examples to highlight the visual impact. From amateurs to expert photographers, I think you'll be amazed by how well this technique emulates a shallow depth of field. So let's dive in and bring that professional bokeh look to any image!

Method

With the goal of artificially adding shallow depth of field, my approach relies on two main pillars - portrait segmentation and monocular depth estimation. Portrait segmentation allows us to identify and isolate the main subject, while depth estimation predicts the relative distance of objects in the image.

Datasets

A key ingredient of any deep learning system is the training data. For this project, I leverage two public datasets tailor-made for portrait segmentation and depth estimation respectively.

- For honing my portrait segmentation model, I utilize the PFCN dataset containing 1800 high-resolution self-portraits from Flickr. This provides over 1000 examples of people as the main subjects, along with pixel-level masks outlining the person's shape.

- To teach my depth estimation model to understand object distances, I tap into the diverse NYU Depth dataset. Collected by NYU researchers, it consists of over 1000 aligned pairs of RGB images and corresponding depth maps. The depth maps encode object distances, allowing the model to learn the relationship between appearance and depth cues.

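With the data and both models in place, the processing pipeline for a single image runs as follows:

- Load the input image

- Compute its edge map using a Sobel filter and append it as a 4th channel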

- Pass this image to the portrait segmentation model to generate the portrait mask

- Pass the same image to the depth estimation model and generate the depth map

- Discretize the depth information into buckets such as 0 - 0.2, 0.2 - 0.4 and so on.

- Apply a progressively stronger Gaussian blur to these buckets. The first bucket (pixels with depth in the range 0 - 0.2) is blurred less than the next bucket (depth in the range 0.2 - 0.4), and so on.

- Stack the pixels from all buckets together to form a single image.

- Use the portrait mask to superimpose the original, sharp subject onto the stacked output, resulting in the final image with a rich bokeh.
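The bucketing, blurring and compositing steps above can be sketched roughly as follows. This is a minimal NumPy-only illustration, not the exact code behind the results in this post: the function names, the five equal-width buckets, the `max_sigma` value, and the assumption that the depth map is normalized to [0, 1] with larger values meaning farther away are all mine. In practice a library blur such as OpenCV's `cv2.GaussianBlur` would be much faster than the hand-rolled one here.

```python
import numpy as np

def gaussian_kernel(sigma: float) -> np.ndarray:
    """1-D Gaussian kernel truncated at about 3 sigma and normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of an (H, W, C) float image with edge padding."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    out = np.pad(img, ((r, r), (r, r), (0, 0)), mode="edge")
    # Blur along rows, then along columns; "valid" trims the padding again.
    out = np.apply_along_axis(np.convolve, 0, out, k, mode="valid")
    out = np.apply_along_axis(np.convolve, 1, out, k, mode="valid")
    return out

def progressive_bokeh(rgb, depth, mask, n_buckets=5, max_sigma=4.0):
    """Blur each depth bucket more strongly than the last, then paste the subject back.

    rgb:   (H, W, 3) floats in [0, 1]
    depth: (H, W) floats in [0, 1], larger = farther (assumed convention)
    mask:  (H, W) floats in [0, 1], 1 = subject
    """
    edges = np.linspace(0.0, 1.0, n_buckets + 1)
    # Bucket index per pixel: 0 for depth in [0, 0.2), 1 for [0.2, 0.4), ...
    bucket = np.clip(np.digitize(depth, edges[1:-1]), 0, n_buckets - 1)

    stacked = np.empty_like(rgb, dtype=np.float64)
    for i in range(n_buckets):
        sigma = max_sigma * (i + 1) / n_buckets  # stronger blur for farther buckets
        blurred = gaussian_blur(rgb, sigma)
        sel = bucket == i
        stacked[sel] = blurred[sel]

    # Composite: sharp subject where mask = 1, blurred background elsewhere.
    alpha = mask[..., None]
    return alpha * rgb + (1.0 - alpha) * stacked
```

Because the mask is used as an alpha value rather than a hard switch, a soft (feathered) mask would blend the subject into the background gradually instead of leaving a hard cut-out edge.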

Portrait Segmentation

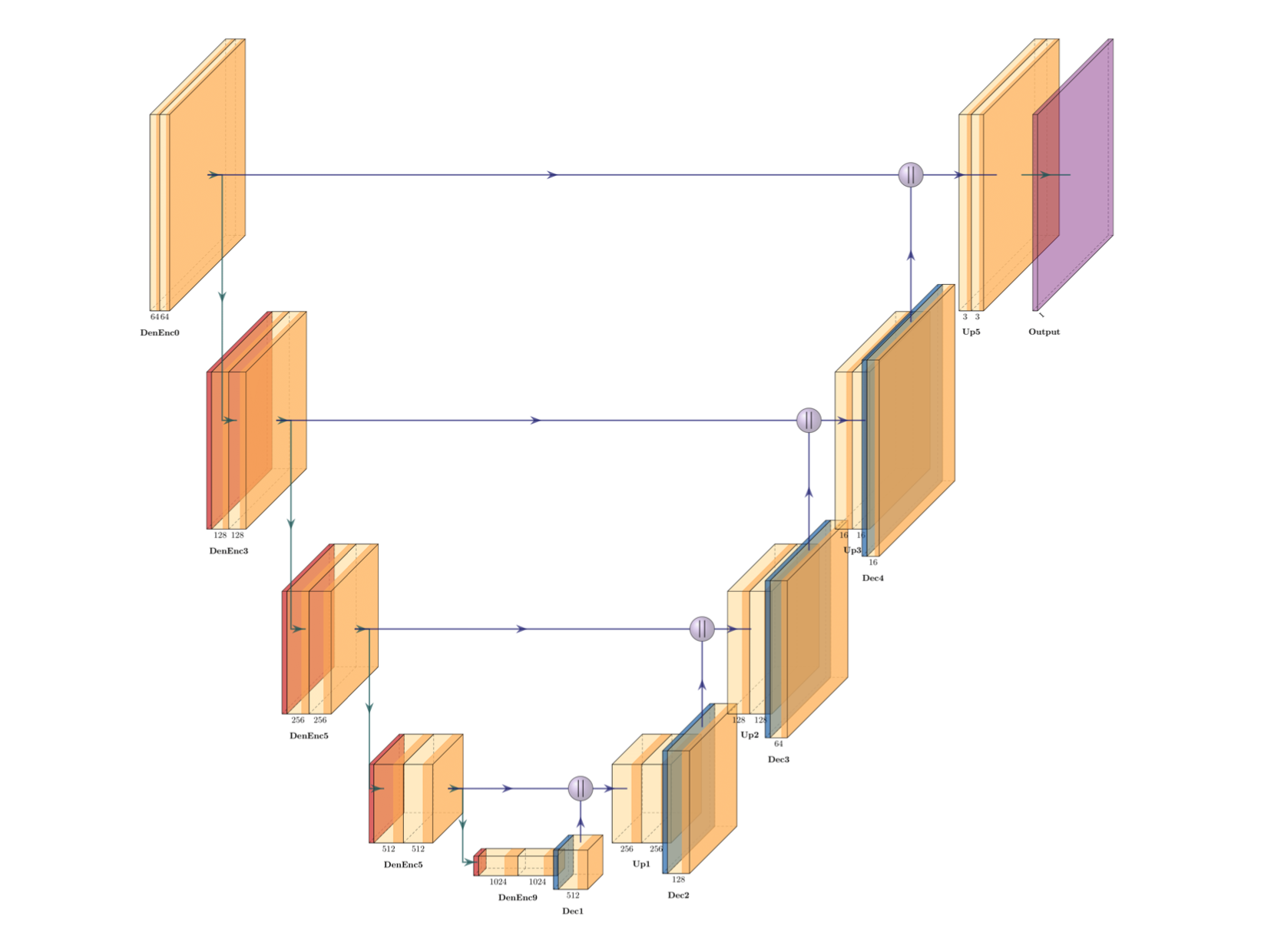

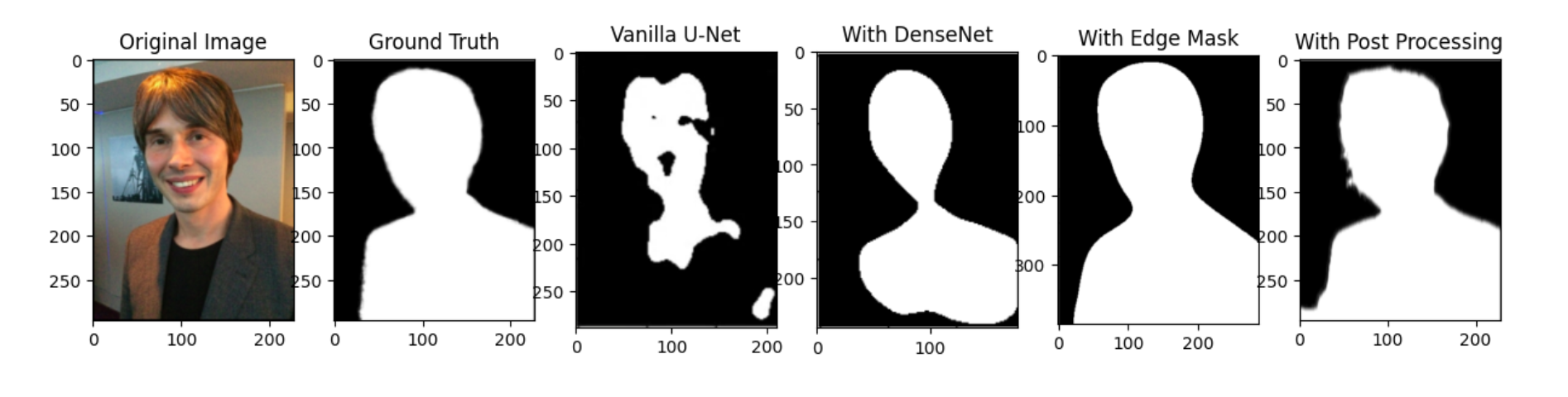

Isolating the main subject is crucial for keeping them in sharp focus against a blurred background. To enable precise portrait extraction, I employ a U-Net architecture enhanced with DenseNet encoders and custom augmentations.

Starting with a vanilla U-Net, I found it struggled to fully segment portrait images after limited training. To boost its capabilities, I leveraged a DenseNet pre-trained on ImageNet as the encoder. This immediately improved results by initializing the model with useful visual features.

However, some gaps still existed in the segmentation. I tackled this by providing an additional input to the network - the edge mask of the input image. Detecting strong edges on the portrait directs the model's attention to important shape contours.

This edge-aware approach, combined with longer training, enabled tight segmentation of subjects from challenging backgrounds. The model learns to rely on both textural cues from DenseNet and structural cues from the edges for robust extraction.
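To make the extra input concrete, here is a small sketch of how an edge map might be computed and stacked onto the RGB image as a 4th channel. It is written in plain NumPy so it stays self-contained; the function names, the luma weights used for the grayscale conversion, and the scaling of the edge map to [0, 1] are assumptions for illustration. A library routine such as OpenCV's `cv2.Sobel` would normally do the gradient step.

```python
import numpy as np

def sobel_edge_map(gray: np.ndarray) -> np.ndarray:
    """Sobel gradient magnitude of a 2-D float image, scaled to [0, 1]."""
    p = np.pad(gray.astype(np.float64), 1, mode="edge")
    # Horizontal gradient: right neighbours minus left neighbours, weighted 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) \
       - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical gradient: lower neighbours minus upper neighbours, weighted 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) \
       - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    mag = np.hypot(gx, gy)
    peak = mag.max()
    return mag / peak if peak > 0 else mag

def add_edge_channel(rgb: np.ndarray) -> np.ndarray:
    """Turn an (H, W, 3) RGB image in [0, 1] into an (H, W, 4) RGB + edge input."""
    gray = rgb @ np.array([0.299, 0.587, 0.114])  # assumed grayscale conversion
    edges = sobel_edge_map(gray)
    return np.concatenate([rgb, edges[..., None]], axis=-1)
```

Note that a network fed this 4-channel input needs a first convolution that accepts four channels, so an ImageNet-pretrained encoder's first layer has to be adapted accordingly.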

After prediction, I further refine the masks using image processing techniques like morphological operations. This helps fill any residual holes and smooth the edges.
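As an illustration of this kind of clean-up, the sketch below applies a morphological closing (dilation followed by erosion) to a binary mask, which fills holes smaller than the structuring element. The square window, the `radius` parameter and the function names are assumptions for this example, not the exact post-processing used here; in practice `cv2.morphologyEx` or `scipy.ndimage.binary_closing` would be the usual tools.

```python
import numpy as np

def _window_stack(mask: np.ndarray, radius: int) -> np.ndarray:
    """Stack every shifted copy of `mask` within a (2r+1) x (2r+1) square window."""
    p = np.pad(mask, radius, mode="constant", constant_values=False)
    h, w = mask.shape
    size = 2 * radius + 1
    return np.stack([p[dy:dy + h, dx:dx + w]
                     for dy in range(size) for dx in range(size)])

def dilate(mask: np.ndarray, radius: int = 1) -> np.ndarray:
    """A pixel becomes foreground if any pixel in its window is foreground."""
    return _window_stack(mask, radius).any(axis=0)

def erode(mask: np.ndarray, radius: int = 1) -> np.ndarray:
    """A pixel stays foreground only if every pixel in its window is foreground."""
    return _window_stack(mask, radius).all(axis=0)

def close_holes(mask: np.ndarray, radius: int = 1) -> np.ndarray:
    """Morphological closing of a 2-D binary mask (radius must be >= 1)."""
    # Pad first so the dilation is not clipped at the image border.
    padded = np.pad(mask.astype(bool), radius, mode="constant", constant_values=False)
    closed = erode(dilate(padded, radius), radius)
    return closed[radius:-radius, radius:-radius]
```

A larger `radius` fills bigger holes but also bridges narrow gaps, for example between an arm and the torso, so it is worth keeping small.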

The final precise masks allow isolated blurring of backgrounds without accidentally catching the subject. Segmentation accuracy is critical to realistically emulate depth of field effects.

Depth Estimation

As with portrait segmentation, I first attempted depth estimation with a U-Net architecture and a DenseNet encoder. However, the task proved too difficult for the model to learn with the limited resources and time available.

Instead of estimating depth, the model's outputs seemed to mirror the intensity values of the input. I suspect the problem lies in the small amount of data I could train on in a resource-constrained environment (Google Colab). Published work reports training such models for at least 24 hours on multiple GPUs, which was not readily available to me.

I compared my model's outputs against those of a pre-trained model and ultimately used the pre-trained model to drive the depth-based progressive blur in the final processing pipeline.

Training Environment

I trained the models on Google Colab. This gave me access to the parallel computing capabilities of its CUDA-enabled NVIDIA GPUs, which significantly reduced training time compared to a CPU-only machine. The experience highlights how much hardware choice matters when optimizing training times for deep-learning models on vision workloads.

Final result and conclusion:

In conclusion, shallow depth of field can be achieved in portrait photography by using depth estimation and portrait segmentation techniques to identify the areas of the image that should be in focus and those that should be blurred. By applying a progressive blur to the image based on the estimated depth of each pixel, it is possible to create the illusion of shallow depth of field and draw the viewer's attention to the subject of the photograph. This technique can be useful for creating more dynamic and engaging portrait images.

Learnings:

- Successfully outperforming the baseline implementation for portrait segmentation underscored the importance of fine-tuning and extensive training for more complex tasks like depth estimation.

- Experimentally observing the impact of batch size adjustments on learning rates provided valuable insights into optimization strategies for neural network training.

- Implementing a progressive blur based on object depth revealed its potential in creating a natural-looking shallow depth of field effect, enhancing visual realism.

- Recognizing the pivotal role of batch normalization in stabilizing and aiding convergence in neural networks emphasized its significance in network training and performance improvement.

- Leveraging a pre-trained model in the encoder demonstrated superior performance compared to training from scratch, highlighting the efficiency and effectiveness of fine-tuning.

- Understanding the critical role of selecting the appropriate loss function in guiding the learning process proved essential for effective network training and task resolution.

References:

- K. Purohit, M. Suin, P. Kandula, and R. Ambasamudram, "Depth-Guided Dense Dynamic Filtering Network for Bokeh Effect Rendering," 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), 2019, pp. 3417-3426, doi: 10.1109/ICCVW.2019.00424.

- Sergio Orts Escolano and Jana Ehman, "Accurate Alpha Matting for Portrait Mode Selfies on Pixel 6", Google AI Blog.

- Ignatov, A., Patel, J., and Timofte, R., "Rendering Natural Camera Bokeh Effect with Deep Learning", arXiv e-prints, 2020.

- Li, Z., & Snavely, N. (2018). MegaDepth: Learning Single-View Depth Prediction from Internet Photos. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2041-2050.

- T. -Y. Kuo, Y. -C. Lo and Y. -Y. Lai, "Depth estimation from a monocular outdoor image," 2011 IEEE International Conference on Consumer Electronics (ICCE), 2011, pp. 161-162, doi: 10.1109/ICCE.2011.5722517.

- Marcela Carvalho, Bertrand Le Saux, Pauline Trouvé-Peloux, Andrés Almansa, Fréderic Champagnat. On regression losses for deep depth estimation. ICIP 2018, Oct 2018, Athens, Greece.

- Shen, X., Hertzmann, A., Jia, J., Paris, S., Price, B., Shechtman, E. and Sachs, I. (2016), Automatic Portrait Segmentation for Image Stylization. Computer Graphics Forum, 35: 93-102.

- Portrait Segmentation Dataset

- NYU Depth V2 Dataset
